The Ethical Use of Technology in the Classroom

Human history is often divided into before and after fire, as harnessing fire arguably marked the beginning of civilization. In many ways, fire was humanity’s first great technological leap. It set the stage for metallurgy, agriculture, and even modern industry. Some historians argue that without fire, civilization as we know it would not have been possible. As the adage goes, fire is a good servant but a bad master. Like fire, technology is neither inherently good nor bad; its impact depends entirely on how it is used.

Technology is transforming the classroom, but with great power comes great responsibility. The modern educator must navigate a digital landscape where students have instant access to information, AI tools that can write essays in seconds, and distractions lurking behind every open browser tab. The question is not whether technology should be used in education—it’s already deeply embedded—but rather how we use it ethically to support learning while maintaining academic integrity.

Take, for example, the rise of generative AI tools. In one sense, they are incredible aids, helping students understand complex topics, summarize research, and even practice foreign languages. But where do we draw the line between assistance and academic dishonesty? If a student submits an AI-generated paper without understanding the content, have they really learned anything? More importantly, does this challenge the very essence of what it means to be educated?

Schools now face a dilemma: How can we harness technology’s benefits while ensuring students develop critical thinking, creativity, and independent problem-solving skills? Some universities have embraced AI as a learning companion, teaching students how to use it ethically rather than banning it outright. Others have reinforced traditional assessment methods, requiring handwritten essays or oral exams to verify understanding.

Surveillance – Striking a Balance Between Safety and Privacy

In an era where technology is deeply woven into education, surveillance tools have become increasingly common in classrooms. Schools use AI-powered plagiarism detectors, remote proctoring software, and even facial recognition systems to monitor students’ activities. While these tools are often justified as necessary for maintaining academic integrity and campus security, they raise an important ethical question: How much monitoring is too much?

Take, for instance, remote proctoring software that tracks students’ eye movements, keystrokes, and background noise during an online exam. While intended to prevent cheating, such tools can feel invasive, treating students as suspects rather than learners. Some students have reported increased anxiety and feelings of distrust, raising concerns about the psychological impact of constant monitoring. Moreover, AI-driven surveillance systems are not infallible. Facial recognition software has been criticized for biases that disproportionately misidentify people of color, leading to ethical and equity concerns.

Many educational platforms gather vast amounts of personal information. On one hand, this data eases administrative pain points and provides insights that can improve teaching and learning. On the other hand, there is the question of who has access to this data and how its misuse can be prevented. Transparency is everything. Students and parents have the right to know what data is being collected and for what purpose. Without clear policies, there is a risk of data misuse, security breaches, or even commercialization of student information. Schools must adopt transparent policies that clearly define the limits of surveillance, ensuring that monitoring serves a legitimate educational purpose rather than simply exerting control.

AI in the Classroom

Artificial intelligence has taken the classroom by storm, offering students and educators unprecedented tools for learning. It has also created an ethical gray area that educators must navigate carefully. Technology is not the enemy, and students will inevitably encounter AI in their academic and professional lives. Even here at Adiutor, we use AI in certain aspects of our day-to-day operations. Rather than banning AI outright, the ethical approach is to teach students how to use it responsibly. This means setting clear guidelines: AI can assist with brainstorming and revision, but it cannot replace critical thinking or independent problem-solving.

There’s also the issue of bias and misinformation. AI models are trained on vast datasets spanning multiple fields of study, but they are not perfect. They can generate incorrect information (hallucinations), reinforce biases present in the data they were trained on, or produce misleading conclusions. Students must learn to critically evaluate AI-generated content, just as they would fact-check any other source. If we fail to teach digital literacy, we risk creating a generation of students who accept algorithmic outputs without question, a troubling prospect for academia and society.

Digital Citizenship

Schools are encouraged to integrate digital ethics into the curriculum, teaching students not just how to use technology, but also how to "question it". This includes:

- Academic Integrity – Helping students distinguish between using technology for assistance and using it for academic dishonesty.

- Data Privacy – Teaching students how their personal information is collected, shared, and sometimes exploited.

- Critical Thinking – Encouraging students to question the validity of online information and recognize biases in digital content.

- Online Behavior – Promoting respectful communication, responsible social media use, and awareness of cyberbullying.

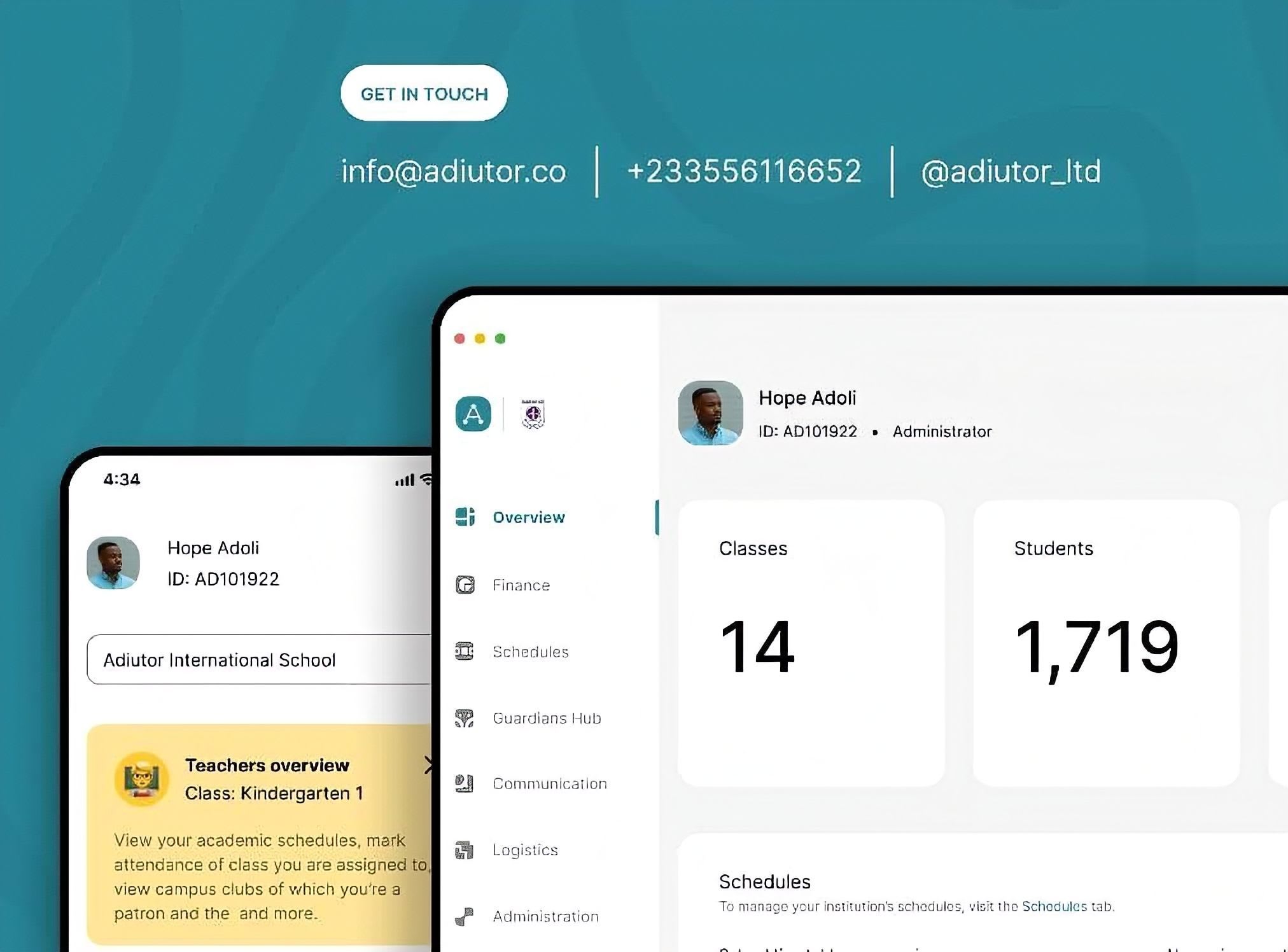

Adiutor

Adiutor means "helper", and we do just that: we take a load off your school administration and help you focus on what matters most, the kids.
